on Note

Reference

Basic Functions

- MPI_Init, MPI_Comm_rank, MPI_Comm_size, MPI_Abort, MPI_Finalize

- MPI_Send, MPI_Recv

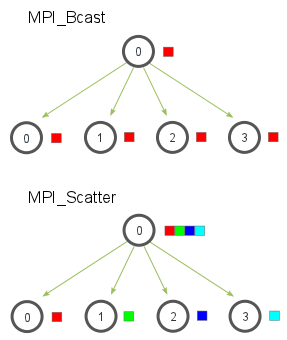

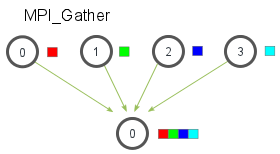

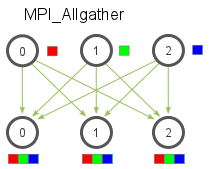

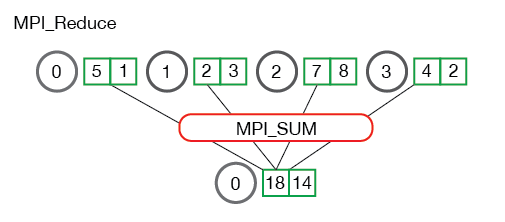

- MPI_Bcast, MPI_Scatter, MPI_Gather, MPI_Reduce

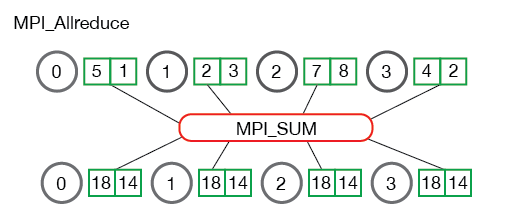

- MPI_Allgather, MPI_Allreduce

Attention

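- MPI_IN_PLACE: passed in place of the send buffer, it tells MPI that the input and output buffers are the same, so the result overwrites the input.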

MPI_Allreduce(MPI_IN_PLACE, pData, (int) nData, GetDataType(pData), MPI_SUM, Communicator())

Simple Example

    #include <mpi.h>
    #include <stdio.h>
    #include <stdlib.h>

    int main(int argc, char** argv) {
        // Initialize the MPI environment
        MPI_Init(NULL, NULL);

        // Find out rank, size
        int world_rank;
        MPI_Comm_rank(MPI_COMM_WORLD, &world_rank);
        int world_size;
        MPI_Comm_size(MPI_COMM_WORLD, &world_size);

        // We are assuming at least 2 processes for this task
        if (world_size < 2) {
            fprintf(stderr, "World size must be greater than 1 for %s\n", argv[0]);
            MPI_Abort(MPI_COMM_WORLD, 1);
        }

        int number;
        if (world_rank == 0) {
            // If we are rank 0, set the number to -1 and send it to process 1
            number = -1;
            MPI_Send(&number, 1, MPI_INT, 1, 0, MPI_COMM_WORLD);
        } else if (world_rank == 1) {
            // Rank 1 blocks until the matching message from rank 0 arrives
            MPI_Recv(&number, 1, MPI_INT, 0, 0, MPI_COMM_WORLD, MPI_STATUS_IGNORE);
            printf("Process 1 received number %d from process 0\n", number);
        }

        MPI_Finalize();
        return 0;
    }

Asynchronous Programming

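    // Declared elsewhere (the trailing underscores suggest class members):
    //MPI_Request send_req_, recv_req_;
    //MPI_Status send_status_, recv_status_;

    void NnetMpiSync::SyncTest() {
        int s = 1, r = 2;

        // Post both nonblocking operations; neither call waits for the peer
        MPI_Isend(&s, 1, MPI_INT, peer_, 2, MPI_COMM_WORLD, &send_req_);
        MPI_Irecv(&r, 1, MPI_INT, peer_, 2, MPI_COMM_WORLD, &recv_req_);

        // Block until both transfers complete; only then is r safe to read
        MPI_Wait(&send_req_, &send_status_);
        MPI_Wait(&recv_req_, &recv_status_);

        // std::endl already flushes the stream
        std::cout << "rank " << rank_ << " " << s << " " << r << std::endl;
    }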

Function Graph

MPI_Bcast & MPI_Scatter

MPI_Gather & MPI_Allgather

MPI_Reduce & MPI_Allreduce
