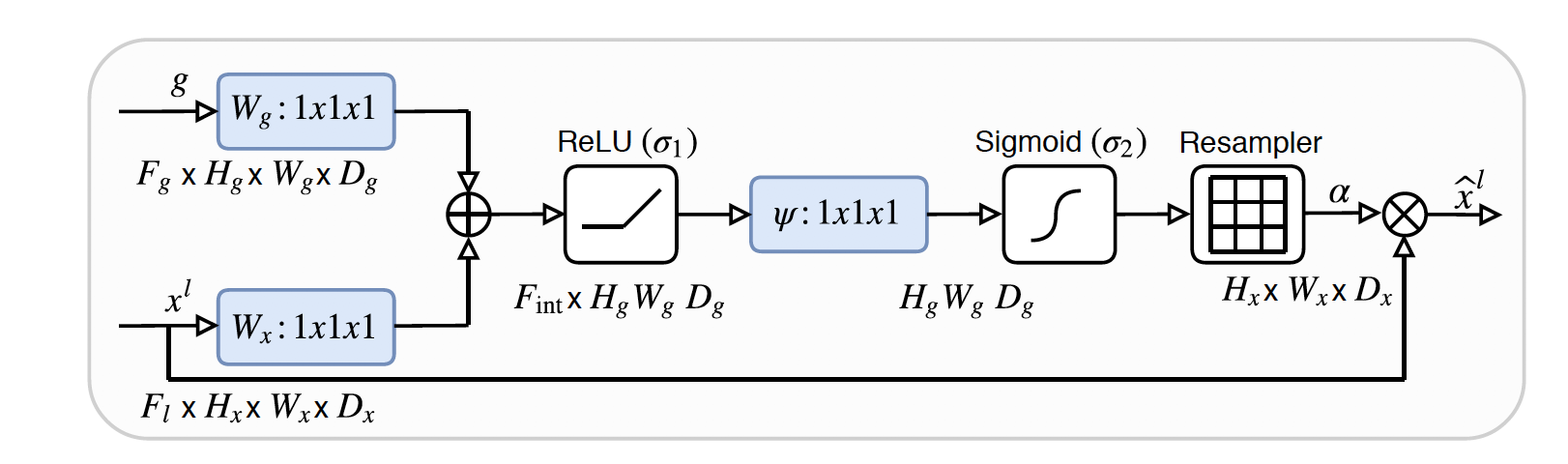

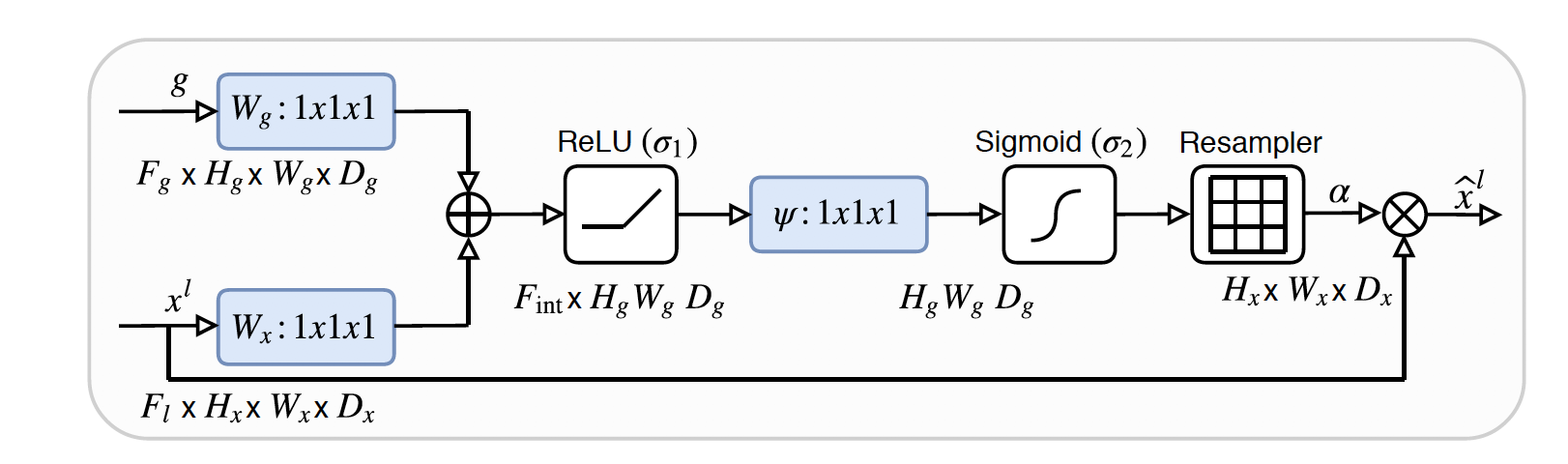

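```python
import torch.nn as nn


class Attention_block(nn.Module):
    def __init__(self, F_g, F_l, F_int):
        super(Attention_block, self).__init__()
        # 1x1 conv + BN: project g from F_g to F_int channels
        self.W_g = nn.Sequential(
            nn.Conv2d(F_g, F_int, kernel_size=1, stride=1, padding=0, bias=True),
            nn.BatchNorm2d(F_int)
        )
        # 1x1 conv + BN: project x from F_l to F_int channels
        self.W_x = nn.Sequential(
            nn.Conv2d(F_l, F_int, kernel_size=1, stride=1, padding=0, bias=True),
            nn.BatchNorm2d(F_int)
        )
        # 1x1 conv + BN + sigmoid: single-channel attention map in (0, 1)
        self.psi = nn.Sequential(
            nn.Conv2d(F_int, 1, kernel_size=1, stride=1, padding=0, bias=True),
            nn.BatchNorm2d(1),
            nn.Sigmoid()
        )
        self.relu = nn.ReLU(inplace=True)

    def forward(self, g, x):
        # g and x must share batch and spatial size so the projections can be added
        g1 = self.W_g(g)
        x1 = self.W_x(x)
        psi = self.relu(g1 + x1)
        psi = self.psi(psi)
        # psi has one channel and broadcasts across all F_l channels of x
        return x * psi
```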
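To make the tensor shapes in `forward` concrete, here is a minimal sketch that repeats the same steps with standalone layers. The channel counts, batch size, and spatial size are hypothetical values chosen for illustration, and the `BatchNorm2d` layers are left out for brevity, so this is not a drop-in replacement for the block above.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical sizes, chosen only for illustration.
F_g, F_l, F_int = 8, 4, 2
g = torch.randn(2, F_g, 16, 16)  # first input (F_g channels)
x = torch.randn(2, F_l, 16, 16)  # second input (F_l channels), same H x W as g

# Standalone 1x1 convolutions mirroring W_g, W_x and psi (BatchNorm omitted).
W_g = nn.Conv2d(F_g, F_int, kernel_size=1)
W_x = nn.Conv2d(F_l, F_int, kernel_size=1)
psi_conv = nn.Conv2d(F_int, 1, kernel_size=1)

with torch.no_grad():
    q = torch.relu(W_g(g) + W_x(x))      # shape (2, F_int, 16, 16)
    alpha = torch.sigmoid(psi_conv(q))   # shape (2, 1, 16, 16), values in (0, 1)
    out = x * alpha                      # alpha broadcasts over the F_l channels

print(q.shape, alpha.shape, out.shape)
```

The output has the same shape as `x`: every channel of `x` is scaled by the same per-pixel weight, which is why `psi` needs only one output channel.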
