There are only minor differences between Deep Q-Network (DQN) and Policy Gradient, so could we combine these two algorithms? The answer is yes, and that is exactly Actor-Critic. However, Actor-Critic can find it hard to learn anything useful, both because its mechanism is complex and because it updates at every time step. Fortunately, Google DeepMind proposed an elegant strategy to solve this problem: the well-known Deep Deterministic Policy Gradient (DDPG). Here I will briefly compare DQN and Policy Gradient and then introduce DDPG.

Comparison of DQN and Policy Gradient

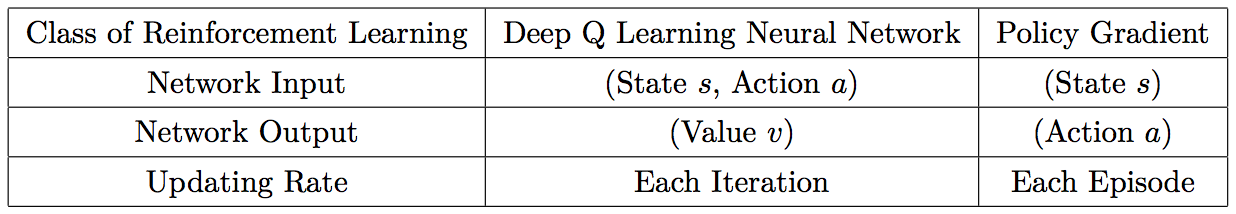

Fig. 1 illustrates the difference between DQN and Policy Gradient. Rewards are used to compute the output value v. In DQN, computing v takes only one time step, whereas Policy Gradient needs a whole episode to compute v. This is why DQN updates faster than Policy Gradient. But could Policy Gradient use the value v computed by DQN and update at every iteration? This is where Actor-Critic comes in. Briefly, DQN acts as a critic that evaluates a (state s, action a) pair with a value v, and Policy Gradient acts as an actor that takes action a in state s. Therefore, DQN can tell Policy Gradient the value v at each step, while Policy Gradient can tell DQN which action a to take in state s. The two pieces fit together perfectly.
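To make the timing difference concrete, here is a minimal NumPy sketch. It is an illustration under stated assumptions, not code from this post or from any library. `monte_carlo_returns` computes the per-step return that Policy Gradient (REINFORCE) can only obtain after the whole episode has finished, while `td_target` builds the DQN-style one-step target from a single transition. `actor_critic_update` is a hypothetical tabular Q actor-critic step: the critic's Q(s, a) replaces the episode return in the actor's gradient, so both parts learn after every transition. All function names, learning rates and the two-armed toy bandit are assumptions. Unlike DQN, this critic bootstraps from the actor's own next action (SARSA-style) rather than the max, because it is meant to evaluate the actor's policy. This is also not DDPG, which uses a deterministic actor and neural networks.

```python
import numpy as np


def monte_carlo_returns(rewards, gamma=0.99):
    """Policy Gradient (REINFORCE) style: G_t for every step is only known
    once the rewards of the whole episode are available."""
    returns = np.zeros(len(rewards))
    g = 0.0
    for t in reversed(range(len(rewards))):
        g = rewards[t] + gamma * g
        returns[t] = g
    return returns


def td_target(reward, q_next, done, gamma=0.99):
    """DQN style: one transition (r, s') is enough to build the target
    r + gamma * max_a' Q(s', a')."""
    return reward if done else reward + gamma * float(np.max(q_next))


def softmax(x):
    z = np.exp(x - np.max(x))
    return z / z.sum()


def actor_critic_update(theta, q, s, a, r, s_next, a_next, done,
                        gamma=0.99, lr_actor=0.1, lr_critic=0.5):
    """One online step of a hypothetical tabular Q actor-critic (in place).

    Critic: TD update of Q(s, a) toward r + gamma * Q(s', a'), where a' is
    the action the actor picked in s'. Actor: move the softmax preferences
    theta[s] along Q(s, a) * grad log pi(a | s)."""
    target = r if done else r + gamma * q[s_next, a_next]
    q[s, a] += lr_critic * (target - q[s, a])

    pi = softmax(theta[s])
    grad_log_pi = -pi              # d log softmax(theta)[a] / d theta = one_hot(a) - pi
    grad_log_pi[a] += 1.0
    theta[s] += lr_actor * q[s, a] * grad_log_pi


# Hypothetical two-armed bandit: one state, every episode is a single step,
# and only action 1 pays a reward of 1.
rng = np.random.default_rng(0)
theta = np.zeros((1, 2))           # actor: action preferences
q = np.zeros((1, 2))               # critic: Q(s, a) table
for _ in range(2000):
    a = rng.choice(2, p=softmax(theta[0]))
    r = 1.0 if a == 1 else 0.0
    actor_critic_update(theta, q, 0, a, r, 0, 0, done=True)

print("MC returns:", monte_carlo_returns([0.0, 0.0, 1.0], gamma=0.9))
print("TD target:", td_target(0.0, np.array([0.2, 0.5]), done=False, gamma=0.9))
print("learned policy:", softmax(theta[0]), "critic Q:", q[0])
```

Because the critic supplies Q(s, a) immediately, the actor is updated after every transition instead of waiting for the episode to end. Weighting the actor's gradient by the raw Q(s, a) keeps the sketch short; practical implementations usually subtract a baseline (for example, using an advantage) to reduce variance.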
