TITLE: Pyramid Scene Parsing Network

AUTHOR: Hengshuang Zhao, Jianping Shi, Xiaojuan Qi, Xiaogang Wang, Jiaya Jia

ASSOCIATION: The Chinese University of Hong Kong, SenseTime

FROM: arXiv:1612.01105

CONTRIBUTIONS

- A pyramid scene parsing network is proposed to embed difficult scenery context features in an FCN based pixel prediction framework.

- An effective optimization strategy is developed for deep ResNet based on deeply supervised loss.

- A practical system is built for state-of-the-art scene parsing and semantic segmentation where all crucial implementation details are included.

METHOD

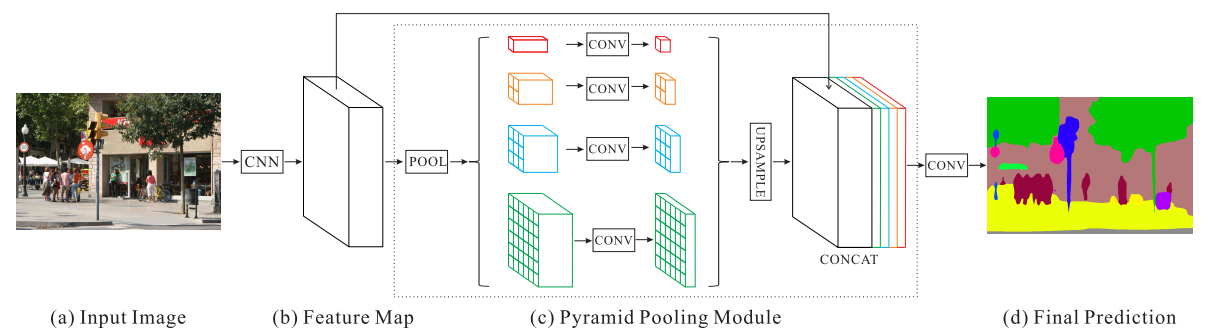

The framework of PSPNet (Pyramid Scene Parsing Network) is illustrated in the figure above.

Important Observations

Three main observations motivated the authors to propose the pyramid pooling module as an effective global context prior.

- Mismatched Relationship: Context relationships are universal and important, especially for complex scene understanding. Visual patterns tend to co-occur. For example, an airplane is likely to be on a runway or flying in the sky, not over a road.

- Confusion Categories: Predictions that mix similar or easily confused categories should be excluded, so that the whole object is covered by a single label rather than multiple labels. This problem can be remedied by utilizing the relationship between categories.

- Inconspicuous Classes: To improve performance on remarkably small or large objects, one should pay close attention to the different sub-regions that contain inconspicuous-category stuff.

Pyramid Pooling Module

The pyramid pooling module fuses features under four different pyramid scales. The coarsest level, highlighted in red, is global pooling that generates a single-bin output. Each following pyramid level separates the feature map into different sub-regions and forms a pooled representation for each location. The outputs of the different levels therefore have different spatial sizes. To maintain the weight of the global feature, a 1×1 convolution layer is applied after each pyramid level to reduce the dimension of the context representation to 1/N of the original, where N is the level size of the pyramid (the number of levels). The low-dimensional feature maps are then directly upsampled via bilinear interpolation to the same size as the original feature map. Finally, the different levels of features are concatenated as the final pyramid pooling global feature.

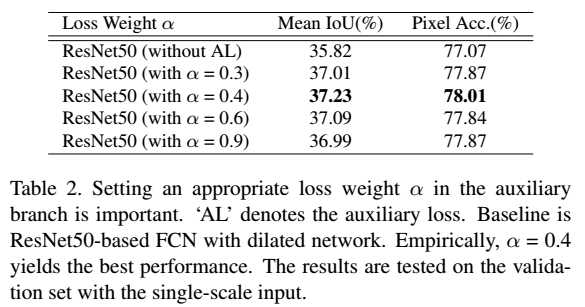
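The pooling and channel bookkeeping described above can be sketched in plain Python. This is a minimal illustration, not the authors' implementation: the function names are hypothetical, the bin sizes (1, 2, 3, 6) and the 2048-channel input are assumptions taken from the commonly cited PSPNet configuration, and the 1×1 convolution and bilinear upsampling steps are omitted. The channel count also assumes the pooled features are concatenated together with the original feature map.

```python
def adaptive_avg_pool2d(fmap, bins):
    """Average-pool a 2D list `fmap` (H x W) into a bins x bins grid.

    Bin i covers rows floor(i*H/bins) .. ceil((i+1)*H/bins) - 1,
    and likewise for columns, so every bin is non-empty.
    """
    h, w = len(fmap), len(fmap[0])
    out = []
    for i in range(bins):
        r0 = (i * h) // bins
        r1 = -((-(i + 1) * h) // bins)  # ceiling division
        row = []
        for j in range(bins):
            c0 = (j * w) // bins
            c1 = -((-(j + 1) * w) // bins)
            cells = [fmap[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            row.append(sum(cells) / len(cells))
        out.append(row)
    return out


def ppm_output_channels(in_channels, bin_sizes=(1, 2, 3, 6)):
    """Channels after concatenation, assuming each of the N levels is
    reduced to in_channels / N and the original map is kept (assumption)."""
    n_levels = len(bin_sizes)
    reduced = in_channels // n_levels
    return in_channels + n_levels * reduced


# A toy 6x6 single-channel feature map with values 0..35.
feature_map = [[r * 6 + c for c in range(6)] for r in range(6)]

# One pooled grid per pyramid level: 1x1, 2x2, 3x3 and 6x6.
pyramid = {b: adaptive_avg_pool2d(feature_map, b) for b in (1, 2, 3, 6)}
```

With four levels, each branch keeps a quarter of the input channels, so the four context branches together contribute as many channels as the original feature map.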

Deep Supervision for ResNet-Based FCN

Apart from the main branch, which uses softmax loss to train the final classifier, another classifier is applied after the fourth stage. The auxiliary loss helps optimize the learning process, while the master branch loss takes most of the responsibility.
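The way the two losses combine can be sketched as a weighted sum. This is a minimal per-pixel illustration under stated assumptions: the function names are hypothetical, and the auxiliary weight of 0.4 is an assumed default, not a value given in this note.

```python
import math


def softmax_cross_entropy(logits, target):
    """Softmax cross-entropy for one pixel, computed with the
    log-sum-exp trick for numerical stability."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[target]


def deeply_supervised_loss(main_logits, aux_logits, target, aux_weight=0.4):
    """Main-branch loss plus a down-weighted auxiliary-branch loss.

    aux_weight < 1 keeps the master branch dominant (assumed value).
    """
    main = softmax_cross_entropy(main_logits, target)
    aux = softmax_cross_entropy(aux_logits, target)
    return main + aux_weight * aux


# Example: a 3-class pixel whose ground-truth class is 0.
loss = deeply_supervised_loss([2.0, 0.5, -1.0], [1.0, 0.8, 0.1], target=0)
```

Keeping the auxiliary weight below 1 reflects the statement that the master branch takes most of the responsibility; the auxiliary classifier only serves as a training aid.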

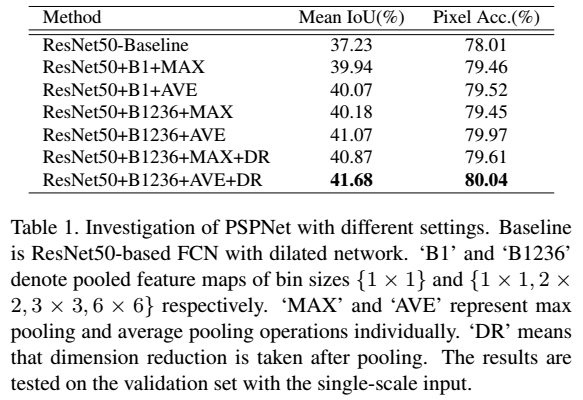

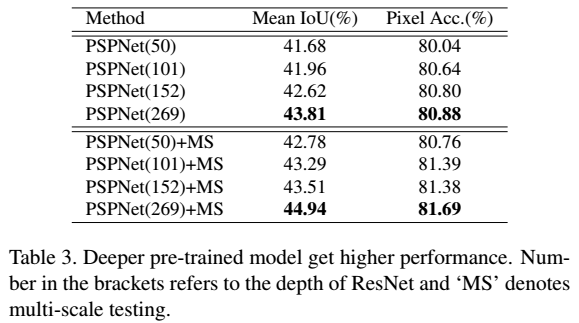

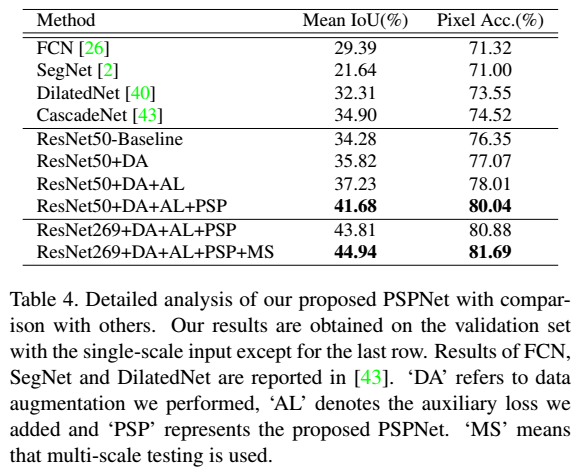

Ablation Study
